The biggest questions in AI

The frenzy, the FOMO and the excitement around AI have left a lot of us wondering about the present and the future of AI. Is AI going to change the world? Or are we in an AI bubble?

“Man v Machine” has long been a recurring theme in pop culture, society and technology at large. The ability of a machine to do tasks that seem superhuman to the average person has captured everyone’s imagination from the very beginning.

When Deep Blue, an IBM supercomputer, beat the world champion Garry Kasparov at chess more than 25 years ago, it unleashed a new wave of hopes and fears around technology and the “machine’s intelligence”. Hopes that it could one day do some tasks more efficiently and quickly than a human, thus making humans better. Fears that it could do other tasks more efficiently and quickly than a human, thus making humans redundant.

We’ve come a long way from Deep Blue. Artificial Intelligence, or AI, has made enormous progress over the last two decades and plays an increasingly important role in the products and applications we use in our daily lives. A lot of it has been abstracted away and works behind the scenes, out of the user’s sight. Recommendations, predictive models, optimisations, automations and other hard computations are now low-hanging fruit for AI, achievable with just a few lines of code.

AI has been getting steadily closer to interpreting and learning human language. For context, this is one of the most complex computational tasks there is, and one that differentiates us from other species. Human language is by nature nuanced and unstructured, and can vary significantly between people. Machines had previously struggled to learn the relationships between words and where each one fits in the context of a sentence. That, and guessing which words or sentences are likely to come next, is something AI and ML models had not been able to do well, until now. Human brains do it with remarkable efficiency and very little computational power. But major improvements in LLMs have allowed AI to clear that hurdle too.
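To make "guessing which word comes next" concrete, here is a deliberately tiny sketch in Python. It is a toy bigram counter, not how LLMs actually work: real models learn from vastly more text with neural networks rather than simple counts. The function names and the sample sentence are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for every word, which words follow it in the text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        following[current_word][next_word] += 1
    return following


def predict_next_word(model, word):
    """Return the word most often seen after `word`, or None if unseen."""
    candidates = model.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# Hypothetical training text, chosen only to keep the example readable.
corpus = "the cat sat on the mat and the cat slept on the sofa"
model = train_bigram_model(corpus)

print(predict_next_word(model, "the"))    # "cat" follows "the" most often
print(predict_next_word(model, "zebra"))  # never seen, so no prediction
```

Even this crude counter shows the limitation the paragraph describes: it only ever looks one word back, so it has no grasp of the wider context of the sentence.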

Large Language Models (LLMs), algorithms that can recognise, summarise, translate, predict and generate human language text, have made massive strides in use cases that involve human communication, conversational language and other complex problems that require ingesting and learning from a billion or more unstructured data points.

Last year, OpenAI took the world by storm by launching ChatGPT, an AI chatbot built on a model from its GPT-3.5 series. While you’ve seen chatbots on the internet and voice-activated products that answer you (Alexa, Siri and the like), ChatGPT is arguably one of the first user-facing products built on a model of this scale: GPT-3, its predecessor, has 175 billion parameters and was trained on a large slice of the text on the public internet. That lets it respond to almost anything you ask in a conversational way. Communicating with a machine as you would with a person, with that flexibility and ease, was something the average internet user had not experienced before. Hence it has spread like wildfire since its launch.

The AI frenzy

The excitement around AI since ChatGPT’s launch is contagious. The hopes and fears seem to have made a comeback. Hopes that AI is going to change the world and the way we interact with technology. Fears that this is how it takes over some tasks we thought we were very good at, and does them 100x better. After all, GPT models (and LLMs in general) can respond to almost any query using what they learned from a vast corpus of internet text, write and debug code, summarise long texts, create stories, generate content and so forth.

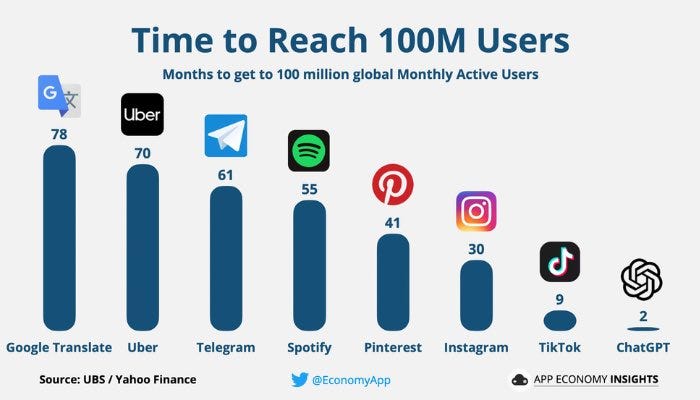

This kind of FOMO around a product or technology is one of a kind. ChatGPT is estimated to have reached 100m monthly active users just two months after its launch, which would make it the fastest-growing consumer app to date.

The number can be misleading, as every new application can now lean on existing social networks and their user bases to grow and go viral. But it is impressive nonetheless.

The excitement was so widespread that OpenAI has already moved to monetise what it sparked, launching a paid Plus tier at $20 per month, with a pricier Pro tier reportedly tested at around $40 per month. Microsoft was quick to capitalise as well, investing a reported $10bn in OpenAI and ensuring it keeps building on Microsoft’s Azure cloud infrastructure. The deal also gives Microsoft first rights to commercialise the tech and use it in its own products.

The frenzy, the FOMO and the excitement around AI have left a lot of us wondering about the present and the future of AI. In this two-part series of posts, I will try to group these thoughts into broad questions and share my own stance on them. The hype and excitement attract both naysayers and optimists, and therefore I feel it’s important to be fastidious about something as big as this.

Is AI the new technology epoch, and will it change the world forever?

The disruptive nature of text-based (ChatGPT) and image-based (DALL-E, Stable Diffusion etc.) applications makes AI seem like the next epoch in technology. These products offer new features that users will value, they are simple and convenient to use, and they have the potential to bring about a step change over existing products.

People in tech and media have hailed AI as the next big thing because it seems to offer very tangible use cases that can be implemented relatively easily. The products can even be adopted and understood by the average person without any high barriers to learning or upskilling. The argument made by people in the “Hopes” camp that AI will “change the world” is based on very visible productivity improvements in text-based and image-based tasks.

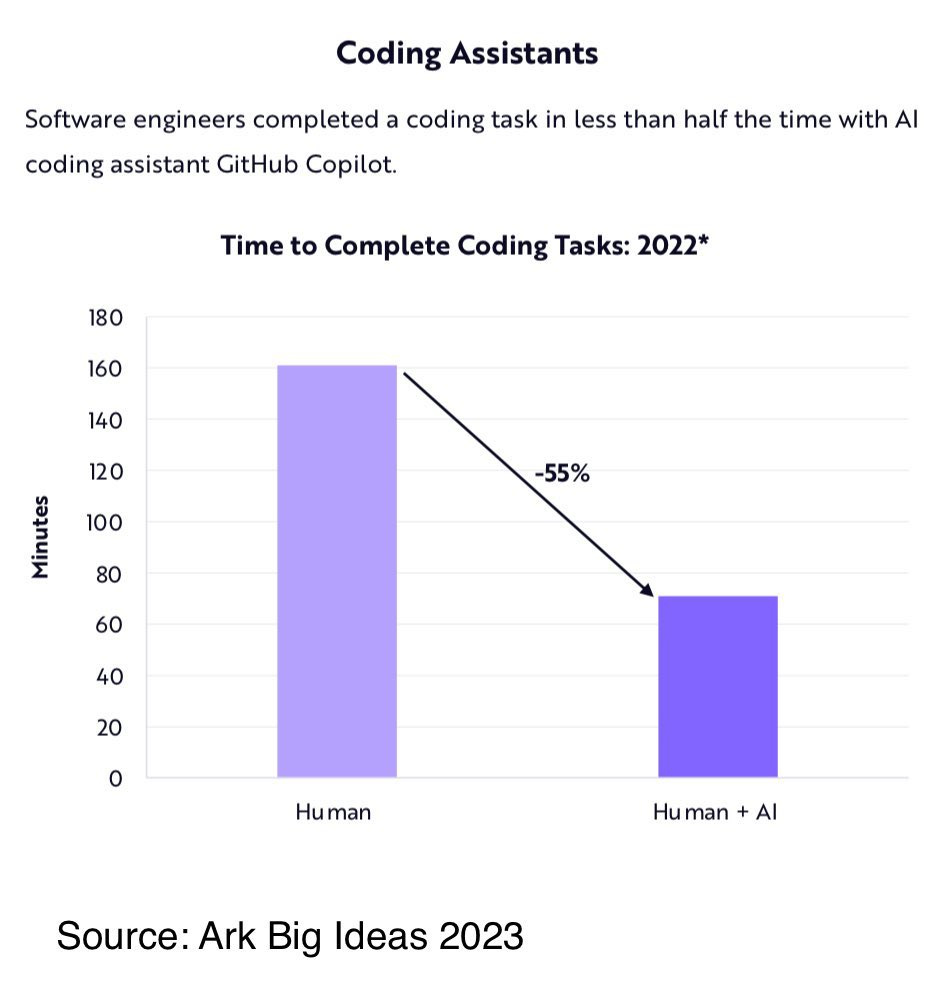

AI is now capable of writing code for programmers. GitHub, in partnership with OpenAI, has launched its Copilot tool, which GitHub’s own research claims helped developers complete a coding task 55% faster. Imagine Gmail autocomplete, but for coding (it offers more features than that, but it’s a close analogy).

Microsoft has already introduced AI-augmented features in existing products like Bing (allowing you to search faster with a conversational chatbot) and Designer (for faster and more creative designing), and plans to do the same with Office and the rest of its lineup.

The tech is being put to use by other enterprises and startups as well, with dozens of new and existing products using it to improve user experience and efficiency. For instance, Allen & Overy, a global law firm, is introducing an AI chatbot to help its lawyers draft contracts faster. Notion, the note-taking app I use for drafting these blog posts, has added an Ask AI feature that lets users write, complete and summarise text.

Snapchat, TikTok and Shopify also plan to include these AI models in their existing feature sets. This is rich evidence of AI capabilities being put to use in areas like sales and marketing, creative roles, drafting, publishing etc. at such an early stage, which is quite promising.

Wonderful. But for now, the productivity gains are mostly restricted to knowledge workers in tech, banking, consulting and law, and to creative fields such as design, art and music. Productivity gains (i.e. TFP or Total Factor Productivity, which in turn drives GDP growth) are more likely to have a “world changing impact” in healthcare (diagnosis, detection, drug discovery), manufacturing, construction and physical service jobs in retail, hospitality, transport etc.
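For readers unfamiliar with TFP, one common textbook way to define it is as the residual in a Cobb-Douglas production function. This is an assumption for illustration; the sources behind the charts in this post may measure it differently. Here $Y$ is output, $K$ is capital, $L$ is labour, $\alpha$ is capital’s share of income and $A$ is TFP:

```latex
Y = A \, K^{\alpha} L^{1-\alpha}
\quad\Longrightarrow\quad
\frac{\dot{Y}}{Y} = \frac{\dot{A}}{A}
  + \alpha \, \frac{\dot{K}}{K}
  + (1-\alpha) \, \frac{\dot{L}}{L}
```

In words: whatever output growth is not explained by adding more capital or more labour is attributed to TFP growth. That is why a technology only “moves the needle” on this measure if it lets the economy produce more from the same inputs.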

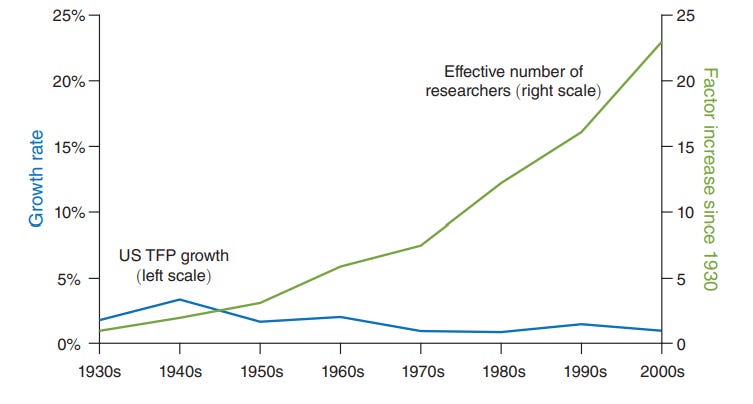

U.S. TFP has barely moved, and has actually trended down, even as the world has become more tech-enabled.

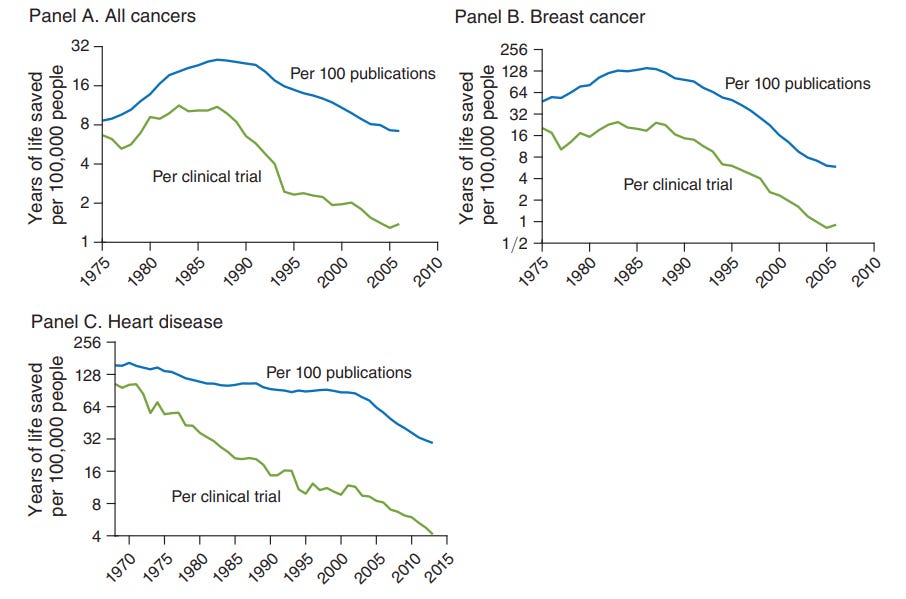

Here’s another example from a recent post in the Klement on Investing newsletter, depicting the decline in research productivity in healthcare (measured as years of life saved per clinical trial or research publication). It is quite astonishing.

It goes on to say:

This trend is not just confined to health care. Similar trends can be seen in agriculture and technology where the famous Moore’s law is increasingly breaking down. Today it takes eighteen times more researchers to double the density of computer chips (and their computing power) than in the 1970s. Everywhere we look we have to invest more and more time and capital to get the same rate of progress as in the past.

Actual use cases, commercial products and business models for these fields are yet to be developed at scale (and there is no evidence yet that they will be), which suggests AI has a long way to go before it supercharges productivity at a scale that makes a difference.

Then there are people in the “Fears” camp, who believe that AI is going to automate away a whole lot of jobs, making humans redundant. That is a valid concern given what we’ve seen LLMs already do, and how quickly and effectively these models are being implemented in user-facing tools. But it is becoming increasingly evident that these LLMs are not perfect. Far from it. The chatbots can be misinformed and biased, and often give out facts that are incorrect in the context of the question asked. They make things up and present them as though they were true (how very human of AI). Here’s a fascinating read where Ben Thompson of Stratechery describes his experience of chatting to the Bing chatbot.

What AI is great at is augmenting human skills and making tasks more efficient, allowing people to do a lot more with a lot less time and resources. AI is more an assistant than a master (at least for now) and is likely to be a copilot rather than a replacement for the human altogether.

To make it work for your specific use case, you would need the right type of prompts, so people who get better at using AI enabled tools might replace people who have not learnt how to do that or do not want to.

The new technology epoch has the wind at its back though. How we interact with technology and tech enabled tools is likely to change forever. But “changing the world” might have to wait for a while.

Does it look like an AI bubble fueled by hype and untethered expectations?

The excitement around AI is not restricted to users on the internet only. The markets have taken note and are increasingly redirecting the flow of money towards this space, trying to get in on the hype and get in there first.

In the public markets, stocks with AI in their names have soared since ChatGPT launched and generative AI gained traction.

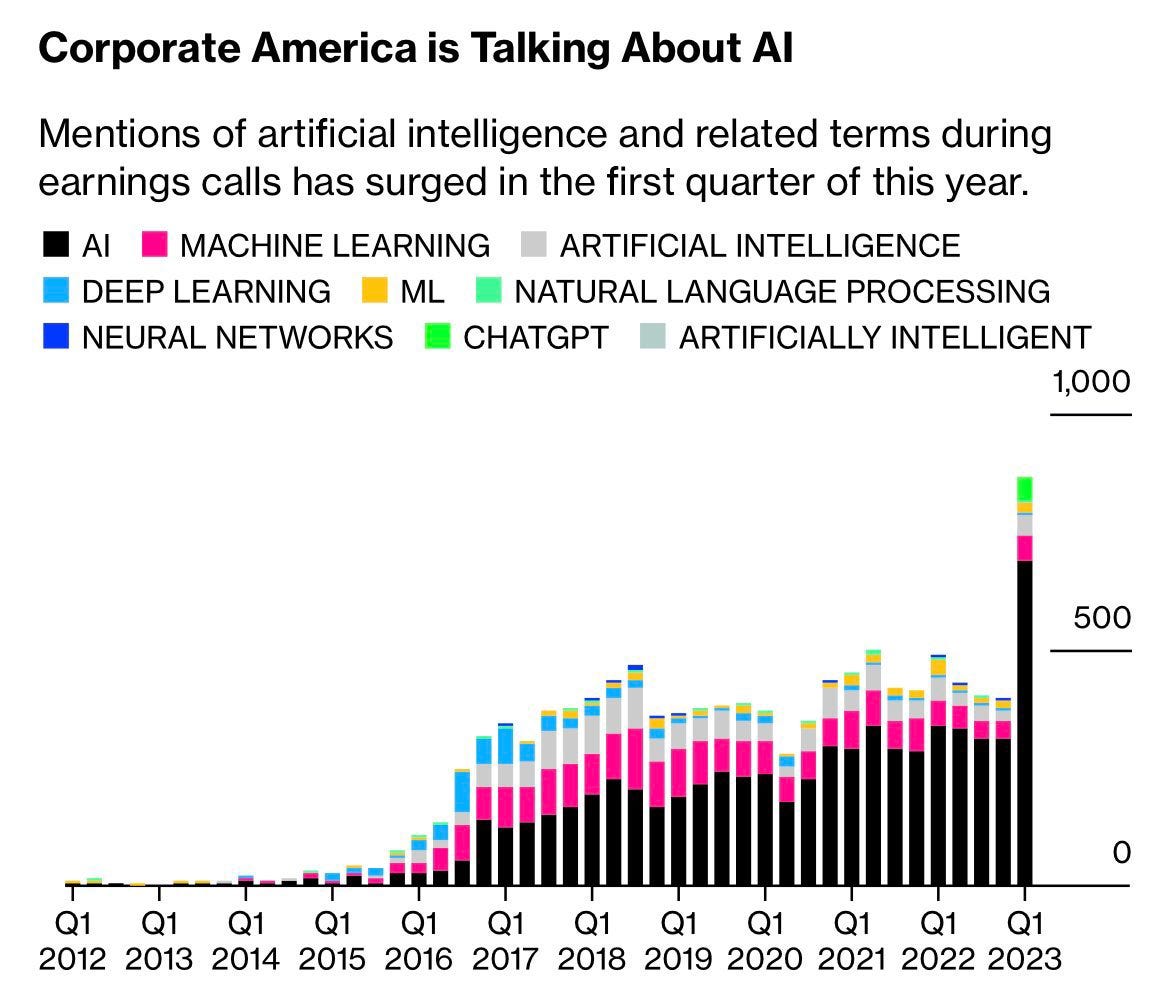

Mentions of AI and ML on quarterly earnings calls are through the roof.

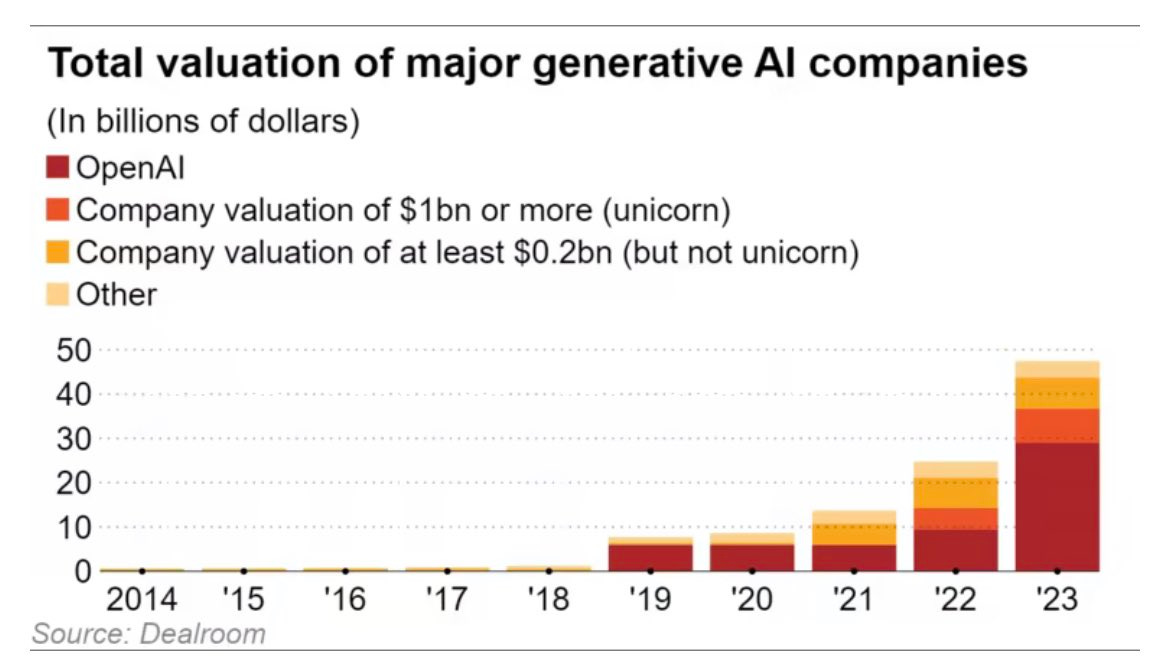

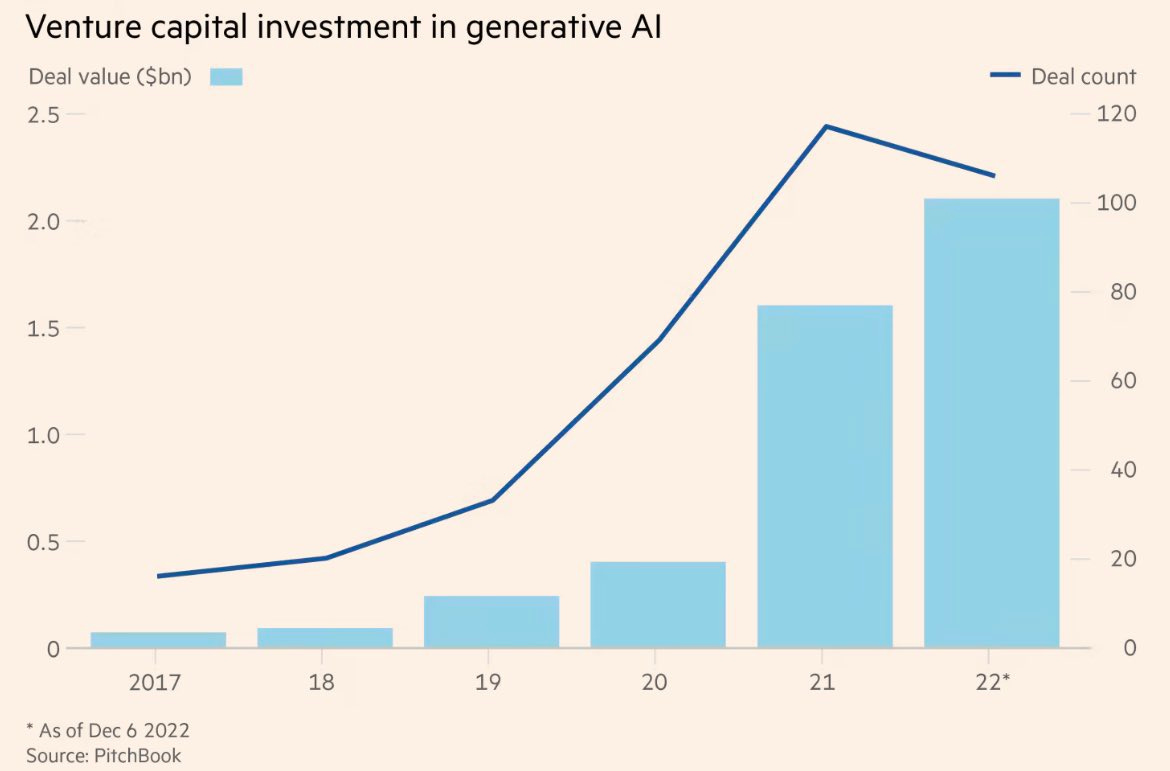

VCs are pumping in capital at insane valuations, and both the capital and the valuations seem to be increasing.

Why does it give me a feeling of déjà vu? We’ve seen this story play out time and time again, most recently with Crypto/Web3, the Metaverse and other hyped-up technologies of the past decade.

Here’s an informative tweet thread by Francois Chollet from the Deep Learning team at Google, with some highlights below:

The parallel (with Web3) is in the bubble formation social dynamics, especially in the VC crowd.

The fact that investment is being driven by pure hype, by data-free narratives rather than actual revenue data or first-principles analysis. The circularity of it all -- hype drives investment which drives hype which drives investment. The influx of influencer engagement bait.

Most of all, the way that narratives backed by nothing somehow end up enshrined as self-evident common wisdom simply because they get repeated enough times by enough people. The way everyone starts believing the same canon (especially those who bill themselves as contrarians).

As of now, this looks like money being thrown at the next shiny object that has got everyone’s attention. There is no clarity on whether there will be business models or products that make money sustainably over a long enough period of time. To add to that, compute costs for training and running AI models are massive (an AI-based search query is said to be seven times more expensive than one on the existing tech, for example).

We are in a world where companies are chasing net zero [carbon emissions], and the luxury of having chatbots we can talk to through AI is burning a hole through the earth in a data centre. - David Leftley of Bloc Ventures

Plus, there is a real risk of the tech being commoditised as every product gets access to the same (or similar) underlying AI model.

The bright side of swaths of capital being thrown at early-stage companies trying to innovate is that it accelerates the pace of innovation. Some of the largest tech companies of today are a result of that. But a large boom followed by a much larger bust sucks capital and talent away so quickly that it slows progress significantly and does more harm than good. People and VCs in Crypto can vouch for that.

In Part II, I’ll try to make a case for some of the most likely winners from the AI hype, and ask whether Google’s cash cow, Search, is at risk of being disrupted.

Thanks for reading!

Until next time,

The Atomic Investor
